Nineteen days ago, Allison underwent surgery for a Cochlear Implant (CI).

Six days ago, the implant was 'hooked up' – the computer in her head was turned on.

To say it was a miracle would be doing a disservice to the engineers and computer scientists who developed, and are continuing to develop, the device's hardware, its software, and its underlying technology. To say it was magic would be overlooking the doctors who perfected the surgery, the first patients who willingly underwent the surgery in spite of great unknown risks, and the audiologists who continue to make it all come together. For these people – the scientists, engineers, programmers, surgeons, audiologists – the fact that my sister can hear after 38 years is not magical, not a miracle. Rather, it's the result of decades of hard work, commitment, and perseverance. Quite simply, Allison can hear because people she never knew dedicated their lives to a cause greater than themselves. So one big clap for them!

But I am getting ahead of myself.

First, the implant:

There are three main components to the CI – A) the processor, which she wears hooked on her ear, much like a hearing aid without the ear mold; B) the external head piece, which attaches to the internal piece via a magnet; and C) the internal electronics implanted in her head, just under her skin.

How these three pieces work together is a bit more complicated, so let's start with an incoming sound and follow it to the brain.

The microphone is located on the processor, the piece that fits behind her ear, and it works kind of like an eardrum, except that instead of a membrane, it's a piece of metal backed by a plastic plate. When the metal piece vibrates from the sound, it induces a charge in the plastic, continuously converting sound into electricity. This electric charge, which follows the pattern of the original sound wave, is now ready for processing.

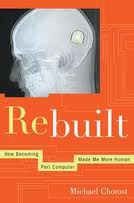

First stop is the analog-to-digital converter. Michael Chorost, author of Rebuilt, describes it like this: imagine the incoming electric charge as a river. The converter samples, or 'dips a figurative finger' into, this river 17,000 times per second. Each time, the strength of the signal at that instant is measured and converted into 1's and 0's; taken together, these measurements are a digital representation of the sound wave, with its pitch and volume captured in how the numbers rise and fall from one sample to the next. In comes an electric charge, and out goes a stream of numbers.
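If you'd like to see the 'finger dipping' in action, here is a minimal Python sketch. It assumes the 17,000-samples-per-second figure from Chorost's description and an 8-bit resolution that I picked purely for illustration (I don't know what resolution Allison's processor actually uses). A pure 440 Hz tone stands in for the microphone's signal, and the names `tone` and `sample_and_quantize` are my own, not anything from the device.

```python
import math

SAMPLE_RATE = 17_000  # "finger dips" per second, the figure Chorost gives
BITS = 8              # hypothetical resolution, chosen only for illustration


def tone(freq_hz):
    """A pure tone standing in for the microphone's continuous electrical signal."""
    return lambda t: math.sin(2 * math.pi * freq_hz * t)


def sample_and_quantize(signal, duration_s, sample_rate=SAMPLE_RATE, bits=BITS):
    """Measure `signal` sample_rate times per second and round each
    measurement to one of 2**bits whole-number levels."""
    levels = 2 ** bits
    codes = []
    for n in range(round(duration_s * sample_rate)):
        t = n / sample_rate                              # moment of this "finger dip"
        v = max(-1.0, min(1.0, signal(t)))               # amplitude, clipped to [-1, 1]
        codes.append(round((v + 1) / 2 * (levels - 1)))  # [-1, 1] -> [0, levels - 1]
    return codes


codes = sample_and_quantize(tone(440), duration_s=0.01)
print(len(codes))                                    # 170 samples in 10 ms
print([format(c, f"0{BITS}b") for c in codes[:4]])   # the 1's and 0's
```

Notice that no single number says "this is a 440 Hz tone"; the pitch only shows up in how the numbers change from one sample to the next.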


Next comes the Automatic Gain Control (AGC) subroutine ('subroutine' is just a computing term that means 'a set of instructions designed to perform a frequently used operation within a program'). What this does is take the enormous range of sound that occurs in the natural world and squeeze it down into a manageable range. I will go into greater detail about this process in a later post, since explaining why a CI needs an AGC requires an understanding of how sound works as well as how the unimpaired ear deals with it. For now, suffice it to say that the difference between very quiet sounds and very loud sounds is much, much greater than we tend to realize.
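Until that later post, here is a toy version of the idea in Python. This is not the algorithm in Allison's processor, which I don't know; it's a simple block-based gain control built on assumptions I made up: blocks of 170 samples (10 ms at 17,000 samples per second), a target loudness, a cap on how much quiet sounds can be boosted, and a "fast attack, slow release" rule, which is one common way to design an AGC. All of the names and numbers are hypothetical.

```python
import math

SAMPLE_RATE = 17_000


def rms(block):
    """Root-mean-square level: a standard measure of how strong a stretch of signal is."""
    return math.sqrt(sum(x * x for x in block) / len(block))


def automatic_gain_control(samples, block_size=170, target_rms=0.1,
                           max_gain=100.0, release=0.2):
    """Minimal block-based AGC sketch (all parameter values hypothetical).

    Each block's level is measured and the gain is adjusted so the output
    level stays near target_rms: loud sounds are turned down at once
    ("fast attack"), quiet sounds are turned up gradually ("slow release").
    """
    out, gain = [], 1.0
    for start in range(0, len(samples), block_size):
        block = samples[start:start + block_size]
        level = rms(block)
        desired = max_gain if level == 0 else min(max_gain, target_rms / level)
        if desired < gain:
            gain = desired                      # fast attack
        else:
            gain += release * (desired - gain)  # slow release
        out.extend(gain * x for x in block)
    return out


def tone(freq_hz, amplitude, n):
    """n samples of a pure tone at the given amplitude."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
            for i in range(n)]


# A whisper-quiet tone followed by one 1,000 times stronger (60 dB louder).
signal = tone(440, 0.001, 1700) + tone(440, 1.0, 1700)
out = automatic_gain_control(signal)
quiet_in, loud_in = rms(signal[1530:1700]), rms(signal[3230:3400])
quiet_out, loud_out = rms(out[1530:1700]), rms(out[3230:3400])
print(f"input ratio  {loud_in / quiet_in:.0f}:1")
print(f"output ratio {loud_out / quiet_out:.1f}:1")
```

Going in, the loud tone is 1,000 times stronger than the quiet one; coming out, the gap between them is far smaller, which is the "squeezing" described above.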

From the Automatic Gain Control, the signal is sent through a set of filters that separate the sound according to frequency. (Frequency is the vibration rate of the sound wave, which determines its pitch: if the wave vibrates slowly, we hear a low sound, and if it vibrates quickly, we hear a high sound.) These are called bandpass filters, because each one lets through, or 'passes', only a limited band of frequencies. I'm not exactly sure how many there are in Allison's implant. In 2005, when Chorost published his book (from which I take a lot of my information), his implant had sixteen different filters. Regardless, what the filters do is separate the signal into different frequency groups, which allows the signal to be manipulated according to frequency. You can think of it like an EQ on your stereo. You can boost the lows if you want more bass (yeah, baby!), and pull the highs down if you don't like how the drummer is playing her cymbals. (But please keep in mind, drummers are people too.)
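For the curious, here is a small Python filter bank that splits one signal into five bands and then applies stereo-style EQ. The five center frequencies, the filter sharpness (`q=2.0`), and the function names are my own assumptions for illustration; a real implant has more bands (Chorost's had sixteen), and I don't know what filter design it uses. Each band here is a standard second-order "biquad" bandpass filter.

```python
import math

FS = 17_000                                # samples per second, from the ADC step
CENTERS_HZ = [250, 500, 1000, 2000, 4000]  # hypothetical band centers (a real implant has more)


def bandpass(samples, center_hz, q=2.0, fs=FS):
    """Second-order (biquad) bandpass filter, using the constant 0 dB
    peak-gain design from Robert Bristow-Johnson's Audio EQ Cookbook."""
    w0 = 2 * math.pi * center_hz / fs
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b0, b2 = alpha / a0, -alpha / a0           # b1 is zero for this design
    a1, a2 = -2 * math.cos(w0) / a0, (1 - alpha) / a0
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b0 * x + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def filter_bank(samples, centers=CENTERS_HZ):
    """Split one signal into one output per frequency band."""
    return {fc: bandpass(samples, fc) for fc in centers}


def equalize(bands, gains):
    """Scale each band by its own gain, like the sliders on a stereo EQ."""
    return {fc: [gains.get(fc, 1.0) * x for x in sig] for fc, sig in bands.items()}


def rms(xs):
    return math.sqrt(sum(x * x for x in xs) / len(xs))


# A low note (250 Hz) plus a high note (4,000 Hz), 0.1 seconds long.
signal = [math.sin(2 * math.pi * 250 * n / FS) + math.sin(2 * math.pi * 4000 * n / FS)
          for n in range(1700)]
bands = filter_bank(signal)
for fc, sig in bands.items():
    print(f"{fc:>5} Hz band: level {rms(sig):.3f}")

boosted = equalize(bands, {250: 2.0})      # "more bass": double the lowest band
```

The 250 Hz and 4,000 Hz bands light up while the bands in between stay comparatively quiet, and `equalize` turns up the bass without touching the other bands.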

Once the sound is filtered, it is customized according to individual needs and/or preferences. This customization is called a MAP, and each person's MAP is unique. According to Amy Gensler, Allison's audiologist, there are many factors that determine how a MAP is programmed. One factor is how the individual's hair cells were damaged. Going back to how the inner ear works, recall the inner and outer hair cells that line the inside of the cochlea. The hair cells at the basal end, near the middle ear, correspond to high-frequency sounds, and the hair cells at the apical end, basically the end point of the spiral, correspond to low frequencies. This means, of course, that how and what Allison heard (after spinal meningitis but before the implant) depended, in large part, on which hair cells were damaged, and how badly.
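To make the idea of a MAP a little more concrete, here is a hypothetical Python sketch. The one detail it takes from the text is the cochlea's layout: high frequencies belong at the basal end, low frequencies at the apical end. Everything else is my assumption, not something Amy Gensler described: that each electrode's setting holds a softest-detectable "threshold" level and a loudest-comfortable "comfort" level, the invented numbers below, and the straight-line mapping between those two levels.

```python
from dataclasses import dataclass


@dataclass
class ElectrodeSetting:
    """One hypothetical MAP entry for one electrode (field names are illustrative)."""
    electrode: int     # 1 = most basal (high pitch) ... N = most apical (low pitch)
    threshold: float   # softest stimulation the person can detect (arbitrary units)
    comfort: float     # loudest stimulation that is still comfortable (arbitrary units)


def build_map(centers_hz, thresholds, comforts):
    """Give the highest-frequency band to the most basal electrode and the
    lowest to the most apical, mirroring the layout of the cochlea.
    thresholds[i] and comforts[i] belong to electrode i + 1."""
    high_to_low = sorted(centers_hz, reverse=True)
    return {fc: ElectrodeSetting(i + 1, t, c)
            for i, (fc, t, c) in enumerate(zip(high_to_low, thresholds, comforts))}


def to_stimulus(band_level, setting):
    """Squeeze a band level in [0, 1] into this electrode's threshold..comfort
    range along a straight line (a deliberate simplification)."""
    level = max(0.0, min(1.0, band_level))
    return setting.threshold + level * (setting.comfort - setting.threshold)


# Invented example numbers: in reality the audiologist measures these.
my_map = build_map([250, 500, 1000, 2000, 4000],
                   thresholds=[100, 110, 105, 120, 130],
                   comforts=[180, 200, 190, 210, 220])
print(my_map[4000])                     # highest band -> electrode 1 (basal)
print(to_stimulus(0.5, my_map[4000]))   # 140.0, halfway between 100 and 180
```

Swapping in a different person's numbers changes the stimulation without touching the rest of the chain, which is roughly what it means for each MAP to be unique.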


Okay, so now that we have a processed signal, it is ready to be sent up the wire to the external head piece.

To be continued . . .

I'm ready for the next part. Thank you so much for writing about both Allie's experience, and providing a broader context to begin to understand hearing.
